Clay figurines were also used to represent the number of counted items visually; for convenience, they were kept in special containers. Such devices appear to have been used by the merchants and accountants of the time.

Gradually, increasingly complex devices evolved from the simplest counting aids: the abacus, the slide rule, the mechanical adding machine, and the electronic computer. Despite their simplicity, early computing devices allow an experienced accountant to get the results of simple calculations even faster than a slow user of a modern calculator. Naturally, the performance and speed of modern computing devices have long since surpassed the capabilities of the most outstanding human calculator.

Early counting aids and devices

Mankind learned to use the simplest counting devices thousands of years ago. The most pressing need was to determine the quantity of goods exchanged in barter. One of the simplest solutions was to use the weight equivalent of the exchanged goods, which did not require an exact count of their individual pieces. Simple balance scales served this purpose and thus became one of the first instruments for measuring mass.

The principle of equivalence was also widely used in another simple counting device familiar to many: the abacus (in Russia, the schoty). The number of objects counted corresponded to the number of beads moved on the instrument.

The rosary, used in the practice of many religions, can be regarded as a relatively complex counting device. As on an abacus, a believer counted prayers on the beads of the rosary. After completing a full circle of the rosary, the believer moved special counter beads on a separate tail to record the number of circles completed.

With the invention of gears, much more complex calculating devices appeared. The Antikythera mechanism was discovered at the beginning of the 20th century in the wreck of an ancient ship that sank around 65 BC (other sources give dates as early as 87 BC). It could even model the movement of the planets. It was presumably used for calendar calculations for religious purposes, predicting solar and lunar eclipses, determining the time of sowing and harvesting, etc. The calculations were performed by more than 30 interconnected bronze wheels and several dials. Lunar phases were calculated with a differential gear, an invention that researchers had long dated to no earlier than the 16th century. However, with the end of antiquity, the skill of building such devices was lost; it took about one and a half thousand years for people to learn to build mechanisms of similar complexity again.

The Calculating Clock of Wilhelm Schickard

This was followed by the machines of Blaise Pascal ("Pascaline", 1642) and Gottfried Wilhelm Leibniz.

ANITA Mark VIII, 1961

In the Soviet Union at that time, the most famous and widespread calculator was the Felix mechanical adding machine, produced from 1929 to 1978 at factories in Kursk (Schetmash plant), Penza and Moscow.

The advent of analog computers in the prewar years


Differential analyzer, Cambridge, 1938

The first electromechanical digital computers

Z-series by Konrad Zuse

Reproduction of the Zuse Z1 computer at the German Museum of Technology, Berlin

Zuse and his company built other computers, each with a name beginning with the capital letter Z. The best-known machines were the Z11, which was sold to the optical industry and to universities, and the Z22, the first computer with magnetic memory.

British Colossus

In October 1947, the directors of Lyons & Company, a British company that owned a chain of shops and restaurants, decided to take an active part in the development of commercial computers. The LEO I computer began operating in 1951 and was the first in the world to be used regularly for routine office work.

The University of Manchester machine became the prototype for the Ferranti Mark I. The first such machine was delivered to the university in February 1951, and at least nine others were sold between 1951 and 1957.

The second-generation IBM 1401 computer, produced in the early 1960s, accounted for about a third of the world computer market; more than 10,000 of these machines were sold.

The use of semiconductors made it possible to improve not only the central processing unit but also peripheral devices. Second-generation storage devices could already hold tens of millions of characters and numbers. Storage was divided into fixed devices, connected to the processor by a high-speed data channel, and removable devices. Replacing a disk pack took only a few seconds. Removable media usually had lower capacity, but their interchangeability made it possible to store an almost unlimited amount of data. Tape was commonly used for archiving data because it provided more storage at a lower cost.

In many second-generation machines, communication with peripherals was delegated to specialized coprocessors. For example, while a peripheral processor reads or punches cards, the main processor performs calculations or executes program branches. One data bus carries data between memory and the processor during the fetch-execute cycle, while other buses typically serve the peripherals. On the PDP-1, a memory access cycle took 5 microseconds. Most instructions required 10 microseconds: 5 to fetch the instruction and another 5 to fetch the operand.
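The PDP-1 timing figures above can be turned into a rough throughput estimate. The sketch below makes two assumptions. First, every instruction needs exactly the memory cycles stated in the text: one to fetch the instruction and one to fetch the operand. Second, there is no other overhead, so the result is an upper bound rather than a measured figure. The helper name `instructions_per_second` is hypothetical.

```python
# Rough throughput estimate from memory-cycle timing (illustrative only).
MEMORY_CYCLE_US = 5  # one PDP-1 memory access, in microseconds (from the text)


def instructions_per_second(cycles_per_instruction: int,
                            cycle_us: float = MEMORY_CYCLE_US) -> float:
    """Upper bound on instructions per second, assuming each instruction
    needs `cycles_per_instruction` memory cycles and nothing else."""
    instruction_time_us = cycles_per_instruction * cycle_us
    return 1_000_000 / instruction_time_us


# A typical instruction: 1 cycle to fetch it + 1 cycle to fetch its operand.
print(instructions_per_second(2))  # 100000.0
```

In other words, a 10-microsecond instruction caps the machine at about one hundred thousand such instructions per second under these assumptions.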

The history of the development of computing technology

The development of computing technology can be divided into the following periods:

- Manual (VI century BC to XVII century AD)

- Mechanical (XVII century to mid-XX century)

- Electronic (mid-XX century to present)

In Aeschylus' tragedy, Prometheus declares: "Think what I did for mortals: I invented number for them and taught them to join letters together." Yet the concept of number arose long before the advent of writing. People learned to count over many centuries, passing on and enriching their experience from generation to generation.

Counting, or more broadly calculation, can take various forms: oral, written, and instrumental. Instrumental counting aids had different capabilities at different times and went by different names.

Manual stage (VI century BC - XVII century AD)

The emergence of counting in antiquity: "This was the beginning of beginnings..."

The estimated age of humankind is 3-4 million years. That long ago, humans first stood upright and picked up tools they had made themselves. However, the ability to count developed much later, about 40-50 thousand years ago (the Late Paleolithic). By the ability to count we mean breaking down the concepts of "more" and "less" into a specific number of units. This stage corresponds to the emergence of modern humans (Cro-Magnons). Thus, one of the main (if not the main) characteristics that distinguish Cro-Magnon man from earlier stages of human development is the ability to count.

It is easy to guess that humanity's first counting device was the fingers.


Fingers turned out to be a great computing machine. With them it was possible to count to 5, and with both hands, to 10. In countries where people went barefoot, it was easy to count to 20 on fingers and toes. At the time, this was enough for most people's needs. Fingers were so closely connected with counting that in ancient Greek the concept of "counting" was expressed by a word meaning "to count by fives". In Russian, too, the word pyat' ("five") resembles pyast' ("metacarpus", part of the hand). Pyast' is now rarely used, but its derivative zapyast'e ("wrist") is common. Among many peoples the hand is both a synonym for and, in fact, the basis of the numeral "five": for example, the Malay "lima" means both "hand" and "five". However, some peoples are known to have counted not on their fingers but on finger joints.

Having learned to count to ten on their fingers, people took the next step and began counting in tens. Some Papuan tribes could count only to six, while others could count to several dozen. Counting that far, however, required several people to count at once.

In many languages, the words "two" and "ten" sound alike. Perhaps this is because the word "ten" once meant "two hands". Even today there are tribes that say "two hands" instead of "ten" and "hands and feet" instead of "twenty". In English, the first ten numbers share a common name, "digits", from the Latin digitus, "finger". This suggests that the English once counted on their fingers.

Finger counting has survived in some places to this day. For example, the historian of mathematics L. Karpinsky reports in his book "History of Arithmetic" that at the world's largest grain exchange, in Chicago, brokers signal bids, offers, and prices with their fingers, without a single word.

Then came counting by moving stones, counting with the help of a rosary... This was a significant breakthrough in human counting abilities: the beginning of the abstraction of numbers.

Lecture No. 10. HISTORY OF THE DEVELOPMENT OF COMPUTING EQUIPMENT

1.1. INITIAL STAGE OF THE DEVELOPMENT OF COMPUTER EQUIPMENT

The need to automate data processing, including calculations, arose a very long time ago. The abacus, a hand-held counting device, is believed to have been historically the first and, accordingly, the simplest counting device.

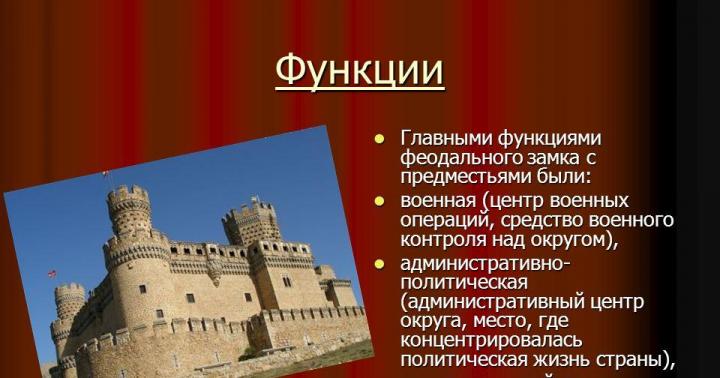

The board was divided into grooves: one groove corresponded to ones, the next to tens, and so on. If ten pebbles accumulated in a groove during counting, they were removed and one pebble was added to the next groove. In the countries of the Far East, the Chinese analogue of the abacus, the suan pan, was widespread (counting on it was based not on tens but on fives); in Russia, the schoty were used.

Abacus

Suan pan, set to 1930

Schoty, set to 401.28
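The grooved counting board described above amounts to a decimal positional system with a carry rule. In the sketch below, each groove holds a pebble count, with the ones groove first, and the function `normalize` applies the carry rule. The list representation and the function names are assumptions made for illustration. Whenever a groove reaches ten pebbles, ten are removed and one is added to the next groove.

```python
def normalize(grooves: list[int]) -> list[int]:
    """Apply the counting-board carry rule.

    grooves[0] holds ones, grooves[1] tens, and so on. Every group of ten
    pebbles in a groove is replaced by one pebble in the next groove.
    """
    result = list(grooves)
    i = 0
    while i < len(result):
        carry, result[i] = divmod(result[i], 10)
        if carry:
            if i + 1 == len(result):
                result.append(0)  # open a new groove for the higher place
            result[i + 1] += carry
        i += 1
    return result


def board_value(grooves: list[int]) -> int:
    """Read the number shown on the board."""
    return sum(count * 10 ** place for place, count in enumerate(grooves))


# Adding 27 + 15: drop the pebbles into the grooves, then carry.
board = [7 + 5, 2 + 1]                # [12, 3] before carrying
print(normalize(board))               # [2, 4]
print(board_value(normalize(board)))  # 42
```

Dropping all pebbles in first and carrying afterwards mirrors the manual procedure: an operator only has to notice full grooves, not perform any arithmetic on multi-digit numbers.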

The earliest surviving attempt to create a machine that could add multi-digit integers is a sketch of a 13-digit adder made by Leonardo da Vinci around 1500.

In 1642, Blaise Pascal invented a device that added numbers mechanically. Gottfried Wilhelm Leibniz studied Pascal's works and his arithmetic machine and made significant improvements to it. In 1673 he designed an adding machine that could perform all four arithmetic operations mechanically. From the 19th century on, adding machines came into very wide use. Even very complex calculations were performed on them, for example, the calculation of ballistic tables for artillery fire. A special profession even emerged: the human calculator.

Despite clear progress compared with the abacus and similar manual counting aids, these mechanical computing devices required constant human intervention during calculations. A person calculating on such a device controlled its operation and determined the sequence of operations.

The dream of the inventors of computing technology was to create a counting automaton that would perform calculations according to a pre-compiled program without human intervention.

In the first half of the 19th century, the English mathematician Charles Babbage tried to create a universal computing device, the Analytical Engine, which was to perform arithmetic operations without human intervention. The Analytical Engine was based on principles that later became fundamental to computing technology, and it provided for all the main components of a modern computer. Babbage's Analytical Engine was to consist of the following parts:

1. The "mill": the device that performs all operations on all types of data (the ALU).

2. The "office": the device that organizes the execution of the data-processing program and coordinates all the machine's units during this process (the CU).

3. The "warehouse": the device that stores initial data, intermediate values, and the results of processing (the memory).

4. Input devices: devices that convert data into a form the machine can process (encoding).

5. Output devices: devices that convert the results of processing into a form a person can understand.

The final design included three punched-card input devices from which the program and the data to be processed were read.
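The toy model below makes the division of labor among these units concrete. It is not a simulation of Babbage's actual mechanism, and all names in it are assumptions chosen for illustration. Its hypothetical card format pairs an operation name with two source addresses and a destination address in the store, and its operation set is equally hypothetical. The control unit reads operation cards in order, the mill does the arithmetic, and the store holds the numbers. Reading a result out of the store stands in for the output device.

```python
from dataclasses import dataclass, field
import operator

# The "mill": the unit that actually performs arithmetic (hypothetical op set).
MILL = {
    "ADD": operator.add,
    "SUB": operator.sub,
    "MUL": operator.mul,
}


@dataclass
class Card:
    """A hypothetical operation card: store[dst] = store[a] <op> store[b]."""
    op: str
    a: int
    b: int
    dst: int


@dataclass
class ToyEngine:
    store: dict[int, int] = field(default_factory=dict)  # the "warehouse"

    def load(self, data_cards: dict[int, int]) -> None:
        """Input device: place initial numbers into the store."""
        self.store.update(data_cards)

    def run(self, program: list[Card]) -> None:
        """Control unit: read operation cards in sequence and drive the mill."""
        for card in program:
            x, y = self.store[card.a], self.store[card.b]  # store -> mill
            self.store[card.dst] = MILL[card.op](x, y)     # mill -> store

    def output(self, address: int) -> int:
        """Output device: report a result from the store."""
        return self.store[address]


# Compute (3 + 4) * 5 with the program kept on separate cards from the data.
engine = ToyEngine()
engine.load({0: 3, 1: 4, 2: 5})
engine.run([Card("ADD", 0, 1, 3), Card("MUL", 3, 2, 4)])
print(engine.output(4))  # 35
```

Note that the program here is a separate list of cards, not something kept in the store alongside the numbers.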

Babbage was unable to complete the work: it proved too difficult for the mechanical engineering of his time. However, he developed the basic ideas. In 1943 the American Howard Aiken built such a machine, called the "Mark-1", at one of IBM's plants, using 20th-century technology: electromechanical relays. It used mechanical elements (counting wheels) to represent numbers and electromechanical elements for control.

1.2. THE BEGINNING OF THE MODERN HISTORY OF ELECTRONIC COMPUTING EQUIPMENT

A true revolution in computing came with the use of electronic devices. Work on them began in the late 1930s, simultaneously in the USA, Germany, Great Britain, and the USSR. Vacuum tubes, which became the technical basis of devices for processing and storing digital information, were by then already widely used in radio engineering.

One of the greatest American mathematicians, John von Neumann, made a huge contribution to the theory and practice of electronic computing in its early stage. The "von Neumann principles" have entered the history of science for good. Together, these principles gave rise to the classical (von Neumann) computer architecture. One of the most important is the stored-program principle, which requires that the program be stored in the machine's memory in the same way as the source data. The first practical stored-program computer, EDSAC, was built in the UK in 1949.
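The stored-program principle can be illustrated with a tiny hypothetical machine in which instructions and data share a single memory. The instruction set (LOAD, ADD, STORE, HALT) and the encoding of instructions as tuples are assumptions for illustration. They are not the instruction set of EDSAC or of any real computer. The point is only that the program counter walks through the same memory that holds the numbers.

```python
# A toy stored-program machine: one memory holds both instructions and data.
# The instruction set (LOAD, ADD, STORE, HALT) is hypothetical.

def run(memory: list) -> list:
    """Fetch-decode-execute loop over a shared memory; returns the memory."""
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, address = memory[pc]  # fetch the instruction from memory
        pc += 1
        if opcode == "LOAD":
            acc = memory[address]
        elif opcode == "ADD":
            acc += memory[address]
        elif opcode == "STORE":
            memory[address] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc - 1}")


memory = [
    ("LOAD", 5),   # 0: acc = memory[5]
    ("ADD", 6),    # 1: acc += memory[6]
    ("STORE", 7),  # 2: memory[7] = acc
    ("HALT", 0),   # 3: stop
    0,             # 4: unused
    20,            # 5: data
    22,            # 6: data
    0,             # 7: the result goes here
]
print(run(memory)[7])  # 42
```

Because the program lives in ordinary memory, a STORE aimed at addresses 0-3 could overwrite instructions. That is exactly what separates this design from a machine that reads its program from separate cards.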

In our country (the USSR), until the 1970s, computers were developed almost entirely independently of the outside world, and that "world" itself depended almost entirely on the United States. The reason is that electronic computing technology was regarded from the very outset as a top-secret strategic product, so the USSR had to develop and produce it on its own. Gradually the secrecy regime eased, but even at the end of the 1980s our country could buy only obsolete computer models abroad. The leading manufacturers, the USA and Japan, still develop and produce the most modern and powerful computers under secrecy.

The first domestic computer, the MESM ("small electronic computing machine"), was created in 1951 under the leadership of Sergei Alexandrovich Lebedev, the greatest Soviet designer of computer technology. The most outstanding of his machines, and one of the best in the world for its time, was the BESM-6 ("large electronic calculating machine, 6th model"). Created in the mid-1960s, it long remained the principal machine for defense, space research, and scientific and technical research in the USSR. In addition to the BESM series, computers of other series were also produced: Minsk, Ural, M-20, Mir, and others.

Once serial production of computers began, they came to be conventionally divided into generations; the corresponding classification is given below.

1.3. COMPUTER GENERATIONS

In the history of computer technology, computers are conventionally grouped into generations. This periodization was originally based on a physical and technological principle: a machine is assigned to a generation according to the physical elements it uses or the technology used to make them. The time boundaries between generations are blurred, since machines of very different levels were produced at the same time. Dates given for generations most likely refer to the period of industrial production. Design work was done much earlier, and some very exotic devices can still be found in operation today.

At present, the physical and technological principle is not the only criterion for assigning a computer to a generation. The level of software, speed, and other factors must also be taken into account; the main ones are summarized in Table 4.1.

It should be understood that the division of computers into generations is very relative. The first computers, produced before the early 1950s, were one-off products on which the basic principles were worked out; there is no particular reason to assign them to any generation. There is no consensus on the defining features of the fifth generation. In the mid-1980s, the main feature of this (future) generation was thought to be the full implementation of the principles of artificial intelligence. This task turned out to be much harder than it seemed at the time. Some specialists have therefore lowered the bar for this stage, and some even claim that it has already arrived. The history of science offers analogues of this phenomenon. After the first nuclear power plants were launched successfully in the mid-1950s, scientists announced that far more powerful, cheap, and environmentally friendly thermonuclear power plants were about to follow. However, they underestimated the enormous difficulties involved, and there are still no thermonuclear power plants today.

At the same time, fourth-generation machines differ from one another enormously, so in Table 4.1 the corresponding column is divided into two: A and B. The dates in the top row correspond to the first years of production. Many of the concepts reflected in the table will be discussed in later sections of the textbook; here we confine ourselves to a brief commentary.

The more recent the generation, the more clearly defined its classification features. Computers of the first, second, and third generations are today at best museum exhibits.

What computers belong to the first generation?

The first generation usually includes machines created around 1950. Their circuits used vacuum tubes. These computers were huge, inconvenient, and very expensive machines that only large corporations and governments could afford. The tubes consumed a huge amount of electricity and generated a lot of heat.

The instruction set was small, the circuitry of the arithmetic logic unit and the control unit was quite simple, and there was practically no software. RAM capacity and performance were low. Punched tapes, punched cards, magnetic tapes, and printing devices were used for input/output.

Speed was about 10-20 thousand operations per second.

But this is only the technical side. Equally important are the ways computers were used, the programming style, and the characteristics of the software.

Programs for these machines were written in the language of the specific machine. The mathematician who wrote a program sat at the machine's control panel, entered and debugged the program, and ran the calculations. Debugging took the most time.

Despite the limited capabilities, these machines made it possible to perform the most complex calculations necessary for weather forecasting, solving problems of nuclear energy, etc.

Experience with the first generation of machines has shown that there is a huge gap between the time spent on developing programs and the time of computing.

Domestic machines of the first generation: MESM (small electronic calculating machine), BESM, Strela, Ural, M-20.

What computers belong to the second generation?

Second-generation computers were machines designed around 1955-1965. They used both vacuum tubes and discrete transistor logic elements. Their RAM was built on magnetic cores. In this period the range of input/output equipment began to expand: high-performance devices appeared for working with magnetic tapes, magnetic drums, and the first magnetic disks.

Performance reached hundreds of thousands of operations per second, and memory capacity reached several tens of thousands of words.

So-called high-level languages appeared. They allow the entire required sequence of computational steps to be described in a clear, easy-to-understand way.

A program written in an algorithmic language is incomprehensible to a computer, which understands only its own instruction language. Therefore, special programs called translators convert a program from a high-level language into machine language.
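The sketch below shows what a translator does on a very small scale. It compiles arithmetic expressions into instructions for a hypothetical stack machine and then executes them. It relies on Python's standard `ast` module for parsing. The target instruction names (PUSH, ADD, SUB, MUL) and the helper names are assumptions, and real translators of the period were far more elaborate.

```python
import ast

# Hypothetical stack-machine opcodes for each supported binary operator.
OPCODES = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL"}


def translate(source: str) -> list[tuple]:
    """Translate an arithmetic expression into stack-machine instructions."""
    code: list[tuple] = []

    def emit(node: ast.AST) -> None:
        if isinstance(node, ast.Constant) and isinstance(node.value, int):
            code.append(("PUSH", node.value))
        elif isinstance(node, ast.BinOp) and type(node.op) in OPCODES:
            emit(node.left)   # code for the left operand first
            emit(node.right)  # then the right operand
            code.append((OPCODES[type(node.op)],))
        else:
            raise SyntaxError(f"unsupported construct: {ast.dump(node)}")

    emit(ast.parse(source, mode="eval").body)
    return code


def execute(code: list[tuple]) -> int:
    """Run the translated program on a simple operand stack."""
    stack: list[int] = []
    for instruction in code:
        if instruction[0] == "PUSH":
            stack.append(instruction[1])
        else:
            right, left = stack.pop(), stack.pop()
            if instruction[0] == "ADD":
                stack.append(left + right)
            elif instruction[0] == "SUB":
                stack.append(left - right)
            else:  # "MUL"
                stack.append(left * right)
    return stack.pop()


program = translate("(2 + 3) * 4 - 6")
print(program)
# [('PUSH', 2), ('PUSH', 3), ('ADD',), ('PUSH', 4), ('MUL',), ('PUSH', 6), ('SUB',)]
print(execute(program))  # 14
```

The translation step runs once and produces a flat instruction list; execution then needs no knowledge of the original expression syntax, which is the essential division of labor between a translator and the machine.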

A wide range of library programs appeared for solving various mathematical problems. Monitor systems also appeared, which controlled the translation and execution of programs. Modern operating systems later grew out of monitor systems.

Thus, an operating system is a software extension of the computer's control unit.

For some machines of the second generation, operating systems with limited capabilities have already been created.

Second-generation machines were characterized by software incompatibility, which made it difficult to build large information systems. Therefore, in the mid-1960s, there was a transition to software-compatible computers built on a microelectronic technological base.

What are the features of third generation computers?

Third-generation machines were created from roughly the 1960s onward. Computing technology developed continuously and involved many people in different countries working on different problems. It is therefore difficult and pointless to try to establish exactly when a "generation" began and ended. Perhaps the most important criterion for distinguishing second- from third-generation machines is one based on the concept of architecture.

Third generation machines are families of machines with a common architecture, i.e. software compatible. As an element base, they use integrated circuits, which are also called microcircuits.

Third-generation machines have advanced operating systems. They offer multiprogramming, i.e., the concurrent execution of several programs. Many tasks of managing memory, devices, and resources came to be handled by the operating system or directly by the machine itself.
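Multiprogramming can be sketched with Python generators. Each "program" yields control after every step, and a hypothetical round-robin scheduler interleaves them so that several programs make progress over the same period. This is a simplified illustration, not a model of any particular third-generation operating system.

```python
from collections import deque
from typing import Iterator


def program(name: str, steps: int) -> Iterator[str]:
    """A toy program that performs `steps` units of work, yielding after each."""
    for i in range(1, steps + 1):
        yield f"{name}:{i}"


def round_robin(programs: list[Iterator[str]]) -> list[str]:
    """Give each ready program one step in turn until all have finished."""
    ready = deque(programs)
    trace = []
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))  # run one step of this program
        except StopIteration:
            continue                     # program finished; drop it
        ready.append(current)            # back of the queue for its next turn
    return trace


print(round_robin([program("A", 3), program("B", 2)]))
# ['A:1', 'B:1', 'A:2', 'B:2', 'A:3']
```

On a single processor the programs do not literally run at the same instant; they are interleaved, which is what "simultaneous execution" means in this context.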

Examples of third-generation machines are the IBM-360 and IBM-370 families, ES computers (Unified System of computers), SM computers (System of Small Computers), etc.

The speed of machines within the family varies from several tens of thousands to millions of operations per second. The capacity of RAM reaches several hundred thousand words.

What is typical of fourth-generation machines?

The fourth generation is the current generation of computer technology, developed after 1970.

Conceptually, the most important criterion distinguishing these computers from third-generation machines is their purpose. Fourth-generation machines were designed to make effective use of modern high-level languages and to simplify programming for the end user.

In terms of hardware, they are characterized by the widespread use of large-scale integrated circuits as the element base and by high-speed random-access memory with a capacity of tens of megabytes.

Structurally, machines of this generation are multiprocessor and multimachine complexes that share memory and a common pool of peripheral devices. Speed reaches several tens of millions of operations per second, and RAM capacity is about 1-64 MB.

They are characterized by:

- the use of personal computers;

- telecommunication data processing;

- computer networks;

- widespread use of database management systems;

- elements of intelligent behavior of data processing systems and devices.

What will fifth-generation computers be like?

Subsequent generations of computers are being developed on the basis of large-scale integrated circuits with a higher degree of integration, using optoelectronic principles (lasers, holography).

Development is also moving toward the "intellectualization" of computers, removing the barrier between human and computer. Computers will be able to take in information from handwritten or printed text, from forms, and from the human voice, to recognize users by voice, and to translate from one language to another.

In the fifth generation computers, there will be a qualitative transition from processing data to processing knowledge.

The architecture of future-generation computers will contain two main blocks. One of them is a traditional computer, which, however, no longer communicates with the user directly. That connection is provided by a block called the "intelligent interface". Its task is to understand a problem statement written in natural language and translate it into a working program for the computer.

Computer networks will also solve the problem of decentralizing computation, using both large computers located far apart and miniature computers placed on a single semiconductor chip.

Generations of computers

| Index | First (1951-1954) | Second (1958-1960) | Third (1965-1966) | Fourth A (1976-1979) | Fourth B (1985-?) | Fifth |
|---|---|---|---|---|---|---|
| Processor element base | Vacuum tubes | Transistors | Integrated circuits (IC) | Large-scale ICs (LSI) | Very-large-scale ICs (VLSI) | Optoelectronics, cryoelectronics |
| RAM element base | Cathode-ray tubes | Ferrite cores | Ferrite cores | LSI | VLSI | VLSI |
| Maximum RAM capacity, bytes | 10² | 10³ | 10⁴ | 10⁵ | 10⁷ | 10⁸ (?) |
| Maximum processor performance, op/s | 10⁴ | 10⁶ | 10⁷ | 10⁸ | 10⁹, multiprocessing | 10¹², multiprocessing |
| Programming languages | Machine code | Assembler | High-level procedural languages (HLL) | New procedural HLLs | Non-procedural HLLs | New non-procedural HLLs |
| Means of communication between user and computer | Control panel and punched cards | Punched cards and punched tapes | Alphanumeric terminal | Monochrome graphic display, keyboard | Color graphic display, keyboard, mouse, etc. | |

PC BASICS

People have always felt the need to count. For this they used their fingers and pebbles, which they piled up or laid out in rows. Quantities were recorded with marks scratched on the ground, notches on sticks, and knots tied in rope.

As the number of items to be counted grew and science and crafts developed, it became necessary to perform simple calculations. The most ancient calculating instrument, known in many countries, is the abacus (in ancient Rome the counting pebbles were called calculi). It allows simple calculations to be performed on large numbers. The abacus proved such a successful tool that it has survived from ancient times almost to the present day.

No one can name the exact time and place where the abacus first appeared. Historians agree that it is several thousand years old and that it may have originated in ancient China, ancient Egypt, or ancient Greece.

1.1. A SHORT HISTORY OF THE DEVELOPMENT OF COMPUTER EQUIPMENT

With the development of the exact sciences, there arose an urgent need to perform large numbers of precise calculations. In 1642, the French mathematician Blaise Pascal built the first mechanical calculating machine, known as Pascal's adding machine (Fig. 1.1). It consisted of interconnected wheels and gears. The wheels were marked with the digits 0 to 9. When the first wheel (ones) completed a full turn, the second wheel (tens) automatically advanced. When the tens wheel in turn passed 9, the third wheel advanced, and so on. Pascal's machine could only add and subtract.

In 1694, the German mathematician Gottfried Wilhelm von Leibniz designed a more advanced calculating machine (Fig. 1.2). He was convinced that his invention would be widely used not only in science but also in everyday life. Instead of Pascal's wheels and gears, Leibniz used cylinders marked with digits. Each cylinder had nine rows of projections (teeth): the first row had one tooth, the second two, and so on up to the ninth row, which had nine. The cylinders were movable, and the operator set them to the required position. The Leibniz machine was more capable: it could perform not only addition and subtraction but also multiplication, division, and even square-root extraction.
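The stepped-drum principle lends itself to a short sketch. As the mechanism is usually described, each turn of the crank adds the set number to the accumulator. Multiplication is performed as repeated addition, with the carriage shifted one decimal place for each digit of the multiplier. The function below mimics that procedure under simplifying assumptions: it counts crank turns rather than modeling the gears, and its name is hypothetical.

```python
def stepped_drum_multiply(setting: int, multiplier: int) -> tuple[int, int]:
    """Multiply by repeated addition with a shifting carriage.

    Returns (product, crank_turns). For each decimal digit of the multiplier,
    starting with the ones, the crank is turned that many times, each turn
    adding `setting` shifted by the current carriage position.
    """
    accumulator = 0
    turns = 0
    position = 0  # carriage shift: 0 = ones, 1 = tens, ...
    while multiplier > 0:
        multiplier, digit = divmod(multiplier, 10)
        for _ in range(digit):
            accumulator += setting * 10 ** position  # one crank turn
            turns += 1
        position += 1  # move the carriage one decimal place
    return accumulator, turns


print(stepped_drum_multiply(127, 23))  # (2921, 5)
```

Shifting the carriage is what keeps the work small: multiplying by 23 takes 3 + 2 = 5 crank turns instead of 23 separate additions.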

Interestingly, descendants of this design survived until the 1970s as mechanical calculators (the Felix arithmometer) and were widely used for all kinds of calculations (Fig. 1.3). However, as early as the end of the XIX century, the invention of the electromagnetic relay led to the first electromechanical calculating devices. In 1887, Herman Hollerith (USA) invented an electromechanical tabulator into which numbers were entered on punched cards. He got the idea of using punched cards from the way conductors punched train tickets. The punched card he developed (later standardized as the 80-column card) changed little and served as an information carrier in the first three generations of computers. Hollerith tabulators were used in the first Russian census of 1897, and the inventor himself traveled to St. Petersburg for the occasion. From that time on, electromechanical tabulators and similar devices came into wide use in accounting.

At the beginning of the XIX century, Charles Babbage formulated the main principles that should underlie the design of a fundamentally new type of computing machine.

In his view, such a machine should have a "warehouse" for storing digital information and a special device that performs operations on numbers taken from the "warehouse"; Babbage called this device the "mill". Another device controls the sequence of operations, transferring numbers from the "warehouse" to the "mill" and back. Finally, the machine must have devices for entering initial data and outputting results. This machine was never built; only models of it existed (Fig. 1.4), but the principles underlying it were later implemented in digital computers.

Babbage's scientific ideas captivated Countess Ada Augusta Lovelace, daughter of the famous English poet Lord Byron. She set out the first fundamental ideas about how the various units of a computer interact and about the sequence in which problems are solved on it. For this reason, Ada Lovelace is rightly considered the world's first programmer. Many of the concepts she introduced in her descriptions of the world's first programs are widely used by programmers today.

Fig. 1.1. Pascal's adding machine

Fig. 1.2. Leibniz's calculating machine

Fig. 1.3. Felix arithmometer

Fig. 1.4. Babbage's machine

A new era in computing technology, based on electromechanical relays, began in 1934. That year the American company IBM (International Business Machines) began producing alphanumeric tabulators capable of multiplication. In the mid-1930s, a prototype of the first local area network was built from tabulators. A department store in Pittsburgh (USA) installed a system of 250 terminals connected by telephone lines to 20 tabulators and 15 typewriters for customer billing. In 1934-1936, the German engineer Konrad Zuse came up with the idea of a universal computer with program control and information stored in memory. He designed the Z-3, the first program-controlled computer and a prototype of modern computers (Fig. 1.5).

Fig. 1.5. Zuse's computer

It was a relay machine that used the binary number system and had a memory of 64 floating-point numbers. The arithmetic unit used parallel arithmetic. Each instruction had an operation part and an address part. Data were entered from a decimal keyboard, digital output was provided, and decimal numbers were converted to binary and back automatically. The machine performed about three additions per second.
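
The automatic conversion of decimal numbers to binary can be illustrated for floating-point values. The following Python sketch is only an illustration under stated assumptions: it uses the standard `math.frexp` function to split a number into a binary mantissa and an exponent, truncates the mantissa to a chosen number of bits, and does not model the Z3's actual word format or conversion circuitry.

```python
import math

def to_binary_float(x: float, mantissa_bits: int = 8) -> tuple[int, str, int]:
    """Represent x as sign * 0.b1b2b3... (binary) * 2**exponent.

    Returns (sign, mantissa bit string, exponent); the mantissa is
    truncated to mantissa_bits binary digits.
    """
    sign = -1 if x < 0 else 1
    m, e = math.frexp(abs(x))  # abs(x) == m * 2**e with 0.5 <= m < 1 (or m == 0)
    bits = []
    for _ in range(mantissa_bits):
        m *= 2                 # shift the next binary digit into the integer part
        bit = int(m)
        bits.append(str(bit))
        m -= bit
    return sign, "".join(bits), e

print(to_binary_float(10.0))  # (1, '10100000', 4): 10 = 0.101 (binary) * 2**4
```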

In the early 1940s, IBM laboratories, together with scientists from Harvard University, began developing one of the most powerful electromechanical computers. Named MARK-1, it contained 760 thousand components and weighed 5 tons (Fig. 1.6).

Fig. 1.6. MARK-1 calculating machine

The last major project in relay computing was the RVM-1, built in the USSR in 1957, which on a number of tasks was quite competitive with the computers of its time. However, with the advent of the vacuum tube, the days of electromechanical devices were numbered. Electronic components were far superior in speed and reliability, and this sealed the fate of electromechanical computers. The era of electronic computers had arrived.

The transition to the next stage in the development of computer technology and programming would have been impossible without fundamental research on the transmission and processing of information. The development of information theory is associated primarily with the name of Claude Shannon. Norbert Wiener is rightly considered the father of cybernetics, and John von Neumann the creator of automata theory.

Cybernetics was born from the synthesis of many scientific fields: first, as a general approach to describing and analyzing the actions of living organisms and of computers or other automata; second, from analogies between the behavior of communities of living organisms and human society, and the possibility of describing both with a general theory of control; and, finally, from the synthesis of information transfer theory and statistical physics, which led to the key discovery linking the amount of information with negative entropy in a system. The term "cybernetics" comes from the Greek word for "steersman"; N. Wiener first used it in its modern sense in 1947. Wiener's book setting out the basic principles of cybernetics is titled "Cybernetics: Or Control and Communication in the Animal and the Machine".

Claude Shannon was an American engineer and mathematician who is considered the father of modern information theory. He showed that the operation of switches and relays in electrical circuits can be described with the algebra invented in the mid-19th century by the English mathematician George Boole. Since then, Boolean algebra has become the basis for analyzing the logical structure of systems of any complexity.
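
Shannon's correspondence between switching circuits and Boolean algebra can be sketched in Python: switches in series conduct only when all of them are closed (logical AND), and switches in parallel conduct when at least one is closed (logical OR). The function names `series`, `parallel` and `circuit` are illustrative, and a closed switch is modeled as `True`.

```python
from itertools import product

def series(*switches: bool) -> bool:
    """Current flows through switches in series only if every one is closed (AND)."""
    return all(switches)

def parallel(*switches: bool) -> bool:
    """Current flows through parallel switches if at least one is closed (OR)."""
    return any(switches)

def circuit(a: bool, b: bool, c: bool) -> bool:
    """Switch a in series with the parallel pair (b, c): a AND (b OR c)."""
    return series(a, parallel(b, c))

# Print the truth table of the circuit (1 = closed switch / current flows).
for a, b, c in product([False, True], repeat=3):
    print(int(a), int(b), int(c), "->", int(circuit(a, b, c)))
```

Because the circuit is just a Boolean expression, algebraic laws such as distributivity, a AND (b OR c) = (a AND b) OR (a AND c), show which two different wirings behave identically.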

Shannon proved that every noisy communication channel has a limiting information transfer rate, called the Shannon limit. At rates above this limit, errors in the transmitted information are unavoidable. Below it, however, suitable encoding methods can make the probability of error arbitrarily small, whatever the noise level in the channel. His research laid the foundation for systems that transmit information over communication lines.
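
The text does not give a formula for the Shannon limit. For the common textbook case of a band-limited channel with additive white Gaussian noise, the limit is given by the Shannon-Hartley formula C = B log2(1 + S/N), where B is the bandwidth in hertz and S/N is the signal-to-noise power ratio. The Python sketch below evaluates it; the 3 kHz bandwidth and 30 dB signal-to-noise ratio are assumed illustrative values, not figures from this book.

```python
import math

def shannon_capacity(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon-Hartley limit C = B * log2(1 + S/N) in bits per second.

    Assumes a band-limited channel with additive white Gaussian noise;
    the signal-to-noise ratio is given in decibels and converted to a power ratio.
    """
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)

# Assumed example: a 3 kHz telephone-grade line at 30 dB signal-to-noise ratio.
capacity = shannon_capacity(3000, 30)
print(f"{capacity / 1000:.1f} kbit/s")  # about 29.9 kbit/s
```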

In 1946, the brilliant Hungarian-born American mathematician John von Neumann formulated the basic concept of storing a computer's instructions in its own internal memory, which gave a huge impetus to the development of electronic computing.
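
The stored-program idea can be shown with a toy machine in which instructions and data sit in the same memory and a program counter fetches the next instruction. This Python sketch is hypothetical: the instruction names LOAD, ADD, STORE and HALT, the single accumulator and the tuple encoding of instructions are assumptions chosen for brevity, not the instruction set of any historical computer.

```python
def execute(memory: list) -> list:
    """Fetch-decode-execute loop of a toy stored-program machine.

    Instructions (as (opcode, address) tuples) and data (as integers)
    share one memory list; the program counter pc selects the next instruction.
    """
    acc = 0  # accumulator register
    pc = 0   # program counter
    while True:
        opcode, address = memory[pc]  # fetch and decode
        pc += 1
        if opcode == "LOAD":
            acc = memory[address]
        elif opcode == "ADD":
            acc += memory[address]
        elif opcode == "STORE":
            memory[address] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")

memory = [
    ("LOAD", 4),   # cell 0: acc = memory[4]
    ("ADD", 5),    # cell 1: acc = acc + memory[5]
    ("STORE", 6),  # cell 2: memory[6] = acc
    ("HALT", 0),   # cell 3: stop
    2, 3, 0,       # cells 4-6: data
]
print(execute(memory)[6])  # 5
```

Loading a different program means only writing different values into memory, not rewiring the machine, in contrast to the manual plugboard setup of ENIAC.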

During World War II, he served as a consultant at the Los Alamos atomic center, where he worked on calculations for the explosive detonation of the atomic bomb; he later took part in developing the hydrogen bomb.

Von Neumann wrote works on the logical organization of computers, the functioning of computer memory, self-reproducing systems and other topics. He took part in the creation of the first electronic computer, ENIAC, and the computer architecture he proposed became the basis for all subsequent models; it is still called the "von Neumann" architecture.

Generation I computers. In 1946, work was completed in the USA on ENIAC, the first computer built from electronic components (Fig. 1.7).

Fig. 1.7. ENIAC, the first computer

The new machine had impressive parameters: it used 18 thousand vacuum tubes, occupied a room of 300 m², weighed 30 tons and consumed 150 kW. It ran at a clock rate of 100 kHz and performed an addition in 0.2 ms and a multiplication in 2.8 ms, about three orders of magnitude faster than relay machines. The new machine's shortcomings soon became apparent. In structure, ENIAC resembled mechanical computers: it used the decimal system, the program was set up manually on 40 plugboards, and reconfiguring the plugboards took weeks. Trial operation showed that the machine's reliability was very low: troubleshooting could take several days. Punched tape and punched cards, magnetic tape and printers were used for data input and output. The stored-program concept was implemented in first-generation computers. They were used for weather forecasting, solving energy problems, military tasks and other important areas.

Generation II computers. One of the most important advances that led to a revolution in computer design, and ultimately to personal computers, was the invention of the transistor in 1948. The transistor, a solid-state electronic switching element (valve), takes up much less space and consumes much less power while doing the same job as a vacuum tube. Computing systems built on transistors were much smaller, more economical and far more efficient than tube machines. The transition to transistors marked the beginning of miniaturization, which made modern personal computers possible (as well as other radio equipment: radios, tape recorders, televisions, etc.). With second-generation machines came the task of automating programming, because the gap between the time spent developing programs and the actual computing time had grown. The second stage of computer development, in the late 1950s and early 1960s, was characterized by the creation of advanced programming languages (Algol, Fortran, Cobol) and by automating the flow of jobs with the help of the computer itself, i.e. the development of operating systems.

In 1959, IBM released the IBM 1401, a transistor-based commercial machine that was delivered in more than 10 thousand units. In the same year, IBM created its first fully transistorized large computer (mainframe), the IBM 7090, with a speed of 229 thousand operations per second, and in 1961 it developed the IBM 7030 for the US nuclear laboratory at Los Alamos.

A prominent Soviet computer of the second generation was the BESM-6 (Large Electronic Calculating Machine), developed by S.A. Lebedev and his colleagues (Fig. 1.8). This generation of computers is characterized by the use of high-level programming languages, which were developed further in the next generation. Transistor machines of the second generation occupied only about five years in the history of computers.

Fig. 1.8. BESM-6

Generation III computers. In 1959, engineers at Texas Instruments developed a way to place several transistors and other elements on a single base (substrate) and to connect them without wires. This is how the integrated circuit (IC, or chip) was born. The first integrated circuit contained only six transistors. Computers were now designed on the basis of integrated circuits with a low degree of integration. Operating systems took over the management of memory, I/O devices and other resources.

In April 1964, IBM announced the System 360, the first family of general-purpose, software-compatible computers and peripherals. Hybrid microcircuits were chosen as the element base of the System 360 family, which is why its models came to be regarded as third-generation machines (Fig. 1.9).

Fig. 1.9. IBM third-generation computer

When creating the System 360 family, IBM allowed itself, for the last time, the luxury of releasing computers incompatible with its earlier ones. The economy, versatility and small size of this generation's computers quickly broadened their range of applications: control, data transmission, automation of scientific experiments, etc. Within this generation, in 1971, the first microprocessor was developed as an unexpected by-product of Intel's work on microcalculators. (Note, by the way, that microcalculators today coexist well with their "blood brothers", personal computers.)

Generation IV computers. This stage in the development of computer technology is associated with large-scale and very-large-scale integrated circuits. Fourth-generation computers began to use high-speed integrated-circuit memory with a capacity of several megabytes.

The four-bit Intel 4004 microprocessor was developed in 1971. The following year the eight-bit 8008 was released, and in 1974 Intel released the 8080, which was 10 times faster than the 8008 and could address 64 KB of memory. It was one of the most important steps toward the modern personal computer. IBM released its first personal computer, the 5100, in 1975: it had 16 KB of memory, a built-in BASIC interpreter and a built-in cassette tape drive that served as a storage device. The IBM PC debuted in 1981, and with it a new standard took its place in the computer industry. A great many programs have been written for this family. The next model was called "extended" (IBM PC-XT) (Fig. 1.10).

Fig. 1.10. IBM PC-XT personal computer

The manufacturers abandoned the tape recorder as a storage device, added a second floppy drive, and made a 20 MB hard drive the main device for storing data and programs. The model was based on the Intel 8088 microprocessor. As microprocessor technology advanced, Intel, IBM's permanent partner, began producing a new series of processors, the Intel 80286, and a corresponding new IBM PC model appeared, named the IBM PC-AT. The next stage was the development of the Intel 80386 and Intel 80486 microprocessors, which can still be found today. They were followed by the Pentium processors, which are the most popular processors today.

Generation V computers. In the 1990s, attention shifted from improving the technical characteristics of computers to their "intelligence", open architecture and networking capabilities. The focus is on knowledge bases, user-friendly interfaces, graphical tools for presenting information, and macroprogramming tools. There are no clear definitions of this stage in the development of computing technology, since the element base on which the classification rests has remained the same; evidently all computers now in production can be assigned to the fifth generation.

1.2. CLASSIFICATION OF COMPUTERS

Computers can be classified according to a number of features, in particular, according to the principle of operation, purpose, methods of organizing the computing process, size and computing power, functionality, etc.

According to the principle of operation, computers can be divided into two broad categories: analog and digital.

Analog computers (AVMs) are continuous-action computers (Fig. 1.11).

Fig. 1.11. Analog computer

They work with information presented in analog form, i.e. as a continuous range of values of some physical quantity. In some devices, computing operations are performed by hydraulic and pneumatic elements. However, electronic AVMs, in which electrical voltages and currents serve as machine variables, are the most widely used.

The operation of AVMs rests on the fact that processes of very different nature are described by the same laws. For example, the oscillations of a pendulum obey the same laws as changes in the electric field strength in an oscillatory circuit. So instead of studying a real pendulum, one can study its behavior on a model implemented on an analog computer. Moreover, the same model can be used to study certain biological and chemical processes that obey these laws.
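
For small swings, the pendulum's angle and the charge in an LC oscillatory circuit obey the same equation, x'' = -ω²x, with ω² = g/l for the pendulum and ω² = 1/(LC) for the circuit. That shared equation is what lets one device model the other. The Python sketch below integrates the equation numerically; the parameter values, the small-angle approximation and the choice of a semi-implicit Euler integrator are assumptions made for illustration.

```python
import math

def simulate_oscillator(omega_sq: float, x0: float, t_end: float, dt: float = 1e-4) -> float:
    """Integrate x'' = -omega_sq * x, starting at rest from x0, with
    semi-implicit (symplectic) Euler steps; return x at time t_end."""
    x, v = x0, 0.0
    for _ in range(round(t_end / dt)):
        v -= omega_sq * x * dt  # update velocity from the restoring term
        x += v * dt             # then update position with the new velocity
    return x

g, length = 9.81, 1.0                     # pendulum: omega^2 = g / l (small angles)
inductance = 1.0                          # LC circuit: omega^2 = 1 / (L * C)
capacitance = length / (g * inductance)   # chosen so both omega^2 values match

pendulum = simulate_oscillator(g / length, x0=0.1, t_end=1.0)
circuit = simulate_oscillator(1 / (inductance * capacitance), x0=0.1, t_end=1.0)
print(pendulum, circuit)  # the two "machines" give practically identical results
```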

The main elements of such machines are amplifiers, resistors, capacitors and inductors, which can be interconnected to reflect the conditions of a particular problem. Problems are programmed by setting up these elements on a plugboard. An AVM is most effective at solving mathematical problems involving differential equations that do not require complex logic. The results are displayed as plots of electrical voltage against time on an oscilloscope screen or recorded by measuring instruments.

In the 1940s and 1950s, electronic analog computers were serious competitors to the newly emerging digital computers. Their main advantages were high speed (comparable to the speed at which an electrical signal passes through the circuit) and the clarity with which they presented simulation results.

Their shortcomings include low computational accuracy, a limited range of solvable problems and manual setting of problem parameters. Today AVMs are used only in very limited areas, such as education, demonstrations and scientific research; they are not used in everyday practice.

Digital computers are based on discrete "yes/no", "zero/one" logic. A computer performs all operations according to a previously written program. Calculation speed is determined by the system clock rate.

By stage of development and element base, digital computers are conventionally divided into five generations:

Generation I (1950s): computers based on electronic vacuum tubes;

Generation II (1960s): computers based on semiconductor elements (transistors);

Generation III (1970s): computers based on semiconductor integrated circuits with a low and medium degree of integration (tens to hundreds of transistors per package);

Generation IV (1980s): computers based on large-scale and very-large-scale integrated circuits, i.e. microprocessors (millions of transistors on one chip);

Generation V (1990s to the present): supercomputers with thousands of parallel microprocessors, which make it possible to build efficient systems for processing huge arrays of information; personal computers with ultra-complex microprocessors and user-friendly interfaces, which have made them part of almost every area of human activity. Network technologies make it possible to unite computer users into a single information society.

In terms of computing power, the following classification of computers took shape in the 1970s and 1980s.

Supercomputers are computers with the greatest capabilities in speed and volume of computation. They are used to solve problems of national and global scale: national security, research in biology and medicine, modeling the behavior of large systems, weather forecasting, etc. (Fig. 1.12).

Fig. 1.12. CRAY-2 supercomputer

Mainframes are computers used for research in large research centers and universities, and in corporate systems: banks, insurance companies, trading firms, transport, news agencies and publishing houses. Mainframes are combined into large computer networks and serve hundreds or thousands of terminals, the machines at which users and clients work directly.

Minicomputers are specialized computers used for particular kinds of work that require relatively high computing power: graphics, engineering calculations, video work, page layout of printed publications, etc.

Microcomputers are the most numerous and diverse class of computers. Its core consists of personal computers, which are now used in almost every branch of human activity. Millions of people use them for professional work, communication over the Internet, entertainment and leisure.

In recent years a taxonomy has emerged that reflects the diversity and characteristics of the large class of computers used directly by end users. These computers differ in computing power, system and application software, set of peripherals, user interface and, as a result, size and price. However, all of them are built on common principles and a single element base, are highly compatible, and share common interfaces and protocols for exchanging data with each other and with networks. The basis of this class of machines is the personal computer, which in the classification above corresponds to the class of microcomputers.

Like any other, this taxonomy is rather arbitrary: since no clear boundary can be drawn between classes of computers, models appear that are hard to assign to a particular class. Still, in general terms it reflects the current diversity of computing devices.

Servers (from the English "serve") are powerful multi-user computers that keep computer networks running (Fig. 1.13).

Fig. 1.13. S390 server

Servers process requests from all workstations connected to the network. A server provides access to shared network resources (computing power, databases, software libraries, printers, fax machines) and distributes these resources among users. In any organization, personal computers are combined into a local network, which makes it possible to exchange data between end users' computers and to use system and hardware resources efficiently.

The point is that preparing a document on a computer (whether an invoice for goods or a scientific report) takes much longer than printing it. It is therefore far more cost-effective to have one powerful network printer for several computers, with the server managing the print queue. If computers are connected to a local network, it is convenient to keep a single database on the server, such as a price list of all of a store's products or a research institute's work plan. In addition, the server provides a shared Internet connection for all workstations, controls access to information for different categories of users, sets access priorities for shared network resources, keeps Internet usage statistics, monitors the work of end users, etc.
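
The server's role in managing a shared print queue can be sketched as a first-in, first-out queue: workstations submit finished documents, and the server sends the oldest waiting job to the shared printer. The class `PrintQueue` and its methods are hypothetical and only illustrate the idea; a real print server also handles the priorities and access rights described above, which this sketch omits.

```python
from collections import deque
from typing import Optional

class PrintQueue:
    """A minimal first-in, first-out queue for one shared network printer
    (a hypothetical sketch, not the API of any real print spooler)."""

    def __init__(self) -> None:
        self._jobs: deque[tuple[str, str]] = deque()

    def submit(self, workstation: str, document: str) -> None:
        """A workstation hands a finished document to the server."""
        self._jobs.append((workstation, document))

    def print_next(self) -> Optional[tuple[str, str]]:
        """The server sends the oldest waiting job to the printer, if any."""
        return self._jobs.popleft() if self._jobs else None

queue = PrintQueue()
queue.submit("pc-1", "invoice.txt")
queue.submit("pc-2", "report.txt")
print(queue.print_next())  # ('pc-1', 'invoice.txt')
```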

A personal computer (PC) belongs to the most common class of computers, capable of solving problems at various levels, from preparing financial statements to engineering calculations. It is designed mainly for individual use (hence the name of the class). A PC has special facilities for connecting to local and global networks. Most of this book is devoted to the hardware and software of this class of computers.

Notebook (from the English "notebook") is a well-established term that reflects the features of this class of personal computers quite inaccurately (Fig. 1.14).

Fig. 1.14. Notebook

In dimensions and weight these computers are closer to a large book, while their functionality and specifications fully match those of a conventional desktop PC. The difference is that they are more compact and lighter and, most importantly, consume much less power, which allows them to run on batteries. The software for this class of PC, from the operating system to applications, is no different from that of desktop computers. In the recent past, this class of PC was called the laptop (a computer that sits on one's lap). That name reflected their features much more accurately, but for some reason it did not catch on.

So the main feature of laptop personal computers is mobility. Their small size and weight and one-piece design make them easy to place anywhere in the workspace and to carry from place to place in a special case or briefcase, and battery power allows them to be used even on the road (in a car or a plane).

All laptop models can be divided into three classes: general-purpose, business and compact (subnotebooks). General-purpose laptops fully replace a desktop PC, so they are relatively large and heavy, but they have a large screen and a comfortable keyboard similar to that of a desktop PC. They have the usual built-in drives: CD-ROM (CD-R, CD-RW, DVD), hard drive and floppy drive. This design practically rules out their use as a "road" PC. A battery charge lasts only 2-3 hours.

Business laptops are designed for use in the office, at home and on the road. They are considerably smaller and lighter and have a minimal set of built-in devices but advanced facilities for connecting additional ones. PCs of this class serve more as a complement to an office or home desktop than as a replacement for it.

Compact laptops (subnotebooks) embody the most advanced achievements of computer technology. They have the highest degree of integration of various devices (the motherboard has built-in components such as audio, video and local network support). Laptops of this class are usually equipped with interfaces for wireless input devices (an additional keyboard or mouse) and a built-in radio modem for connecting to the Internet, use compact smart cards as storage devices, etc. At the same time, such devices weigh no more than 1 kg and are about 1 inch (2.54 cm) thick. A battery charge lasts several hours; however, such computers cost two to three times more than conventional PCs.

A pocket personal computer (PDA, Pocket PC) consists of the same parts as a desktop computer: processor, memory, sound and video system, screen, and expansion slots that can be used to add memory or other devices. Battery power provides two months of operation. All these components are very compact and tightly integrated, so the device weighs 100-200 g and fits in the palm of your hand, a shirt breast pocket or a handbag (Fig. 1.15).

Fig. 1.15. Pocket personal computer

Not surprisingly, these devices are also called "handhelds" or "palmtops".

However, the functionality of a PDA differs greatly from that of a desktop computer or laptop. First, it has a relatively small screen and, as a rule, no keyboard or mouse, so interaction with the user is organized differently: the PDA screen is pressure-sensitive and is operated with a special pen called a "stylus". To enter text, a so-called virtual keyboard is used: its keys are displayed directly on the screen and tapped with the stylus. Another important difference is the absence of a hard drive, so relatively little information can be stored. The main storage for programs and data is built-in memory of up to 64 MB, and flash memory cards play the role of disks. These cards hold programs and data that do not need to be in fast-access memory (photo albums, MP3 music, e-books, etc.). Because of these features, PDAs are often paired with a desktop PC, for which special interface cables exist.

Notebooks and PDAs are designed for completely different tasks and built on different principles; they complement each other but do not replace each other.

A laptop is used in the same way as a desktop computer, whereas a PDA is switched on and off several times a day. Programs load and shut down almost instantly.

In technical specifications, modern PDAs are quite comparable to desktop computers released only a few years ago. This is more than enough for high-quality display of text, for example when working with e-mail or a text editor. Modern PDAs are also equipped with a built-in microphone, speakers and headphone jacks. They communicate with a desktop PC and other peripherals via USB, infrared (IrDA) or Bluetooth (a modern wireless interface).

In addition to a special operating system, PDAs usually come with built-in applications, including a text editor, a spreadsheet, a scheduler, a web browser, a set of diagnostic programs, etc. Recently, Pocket PC-class computers have been equipped with built-in means of connecting to the Internet (an ordinary cell phone can also be used as an external modem).

Thanks to their capabilities, pocket personal computers can be regarded not merely as simplified PCs with reduced capabilities but as full members of the computer community, with undeniable advantages of their own even over the most advanced desktop computers.

Electronic secretaries (PDA, Personal Digital Assistant) have the format of a pocket computer (weighing no more than 0.5 kg) but are used for other purposes (Fig. 1.16).

Fig. 1.16. Electronic secretary

They are oriented toward electronic directories: storing names, addresses and phone numbers, the daily schedule and meetings, to-do lists, expense records, etc. An electronic secretary may have built-in text and graphics editors, spreadsheets and other office applications.

Most PDAs have modems and can communicate with other PCs; when connected to a computer network, they can receive and send e-mail and faxes. Some PDAs are equipped with radio modems and infrared ports for wireless communication with other computers. Electronic secretaries have a small liquid crystal display, usually housed in the device's flip cover. Information can be entered manually from a miniature keyboard or, as on a pocket PC, via a touch screen. A PDA can be called a computer only with major reservations: some classify these devices as ultra-portable computers, others as "intelligent" calculators, and still others as organizers with advanced features.

Electronic notebooks (organizers) belong to the "lightest category" of portable computers (they weigh no more than 200 g). Organizers have a capacious memory in which you can record the necessary information and edit it with the built-in text editor; business letters, texts of agreements and contracts, the daily schedule and business meetings can be stored in memory. The organizer has an internal timer that reminds you of important events. Access to information can be password-protected. Organizers are often equipped with a built-in translator with several dictionaries.

Information is displayed on a small monochrome liquid crystal display. Thanks to the low power consumption, the batteries can preserve stored data for up to five years without recharging.

A smartphone is a compact device that combines the functions of a cell phone, an electronic notebook and a digital camera with mobile Internet access (Fig. 1.17).

Fig. 1.17. Smartphone

A smartphone has a microprocessor, RAM and read-only memory; Internet access is provided over cellular channels. The photo quality is not high, but it is sufficient for use on the Internet and for sending by e-mail. Video recording time is about 15 s. It has a built-in slot for smart cards. A battery charge lasts 100 hours. It weighs 150 g. It is a very handy and useful device, but its cost is comparable to that of a good desktop computer.

As soon as people discovered the concept of "quantity", they began looking for tools to simplify and speed up counting. Today, super-powerful computers built on the principles of mathematical calculation process, store and transmit information, the most important resource and engine of human progress. A brief review of the main stages of this process gives a clear idea of how computer technology developed.

The main stages in the development of computer technology

The most common classification divides the development of computer technology into the following main stages, in chronological order:

- Manual stage. It began at the dawn of humanity and lasted until the middle of the 17th century. During this period the foundations of counting emerged. Later, with the formation of positional number systems, devices appeared (the abacus, the counting frame and, later, the slide rule) that made it possible to calculate digit by digit.

- Mechanical stage. It began in the middle of the 17th century and lasted almost until the end of the 19th century. The level of science in this period made it possible to create mechanical devices that performed the basic arithmetic operations and automatically carried over into the higher digits.

- Electromechanical stage. This is the shortest stage in the history of computer technology, lasting only about 60 years: from the invention of the first tabulator in 1887 until 1946, when the very first computer, ENIAC, appeared. The new machines, based on an electric drive and electric relays, made it possible to calculate much faster and more accurately, but the counting process still had to be controlled by a person.

- Electronic stage. It began in the second half of the last century and continues today. This is the story of six generations of electronic computers, from the very first gigantic units based on vacuum tubes to super-powerful modern supercomputers with huge numbers of parallel processors capable of executing many instructions simultaneously.

This chronological division into stages is rather conventional: while some types of computers were in use, the prerequisites for the next ones were already being actively created.

The very first counting devices

The earliest counting tool known to the history of computer technology is the ten fingers of the human hands. Counting results were initially recorded with fingers, notches on wood and stone, special sticks and knots.

With the advent of writing, various ways of writing numbers appeared and developed, and positional number systems were invented (decimal in India, sexagesimal in Babylon).
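
In a positional number system, the value of a digit depends on its position: each position is worth the base times the position to its right. The Python sketch below, with illustrative function names, converts a number into decimal and sexagesimal digits. It shows only the positional principle, not how such digits were actually written down.

```python
def to_digits(n: int, base: int) -> list[int]:
    """Digits of a non-negative integer n in the given base, most significant first."""
    if n == 0:
        return [0]
    digits = []
    while n > 0:
        n, remainder = divmod(n, base)  # peel off the lowest position
        digits.append(remainder)
    return digits[::-1]

def from_digits(digits: list[int], base: int) -> int:
    """Rebuild the number: each step multiplies the running value by the base."""
    value = 0
    for d in digits:
        value = value * base + d
    return value

print(to_digits(4000, 10))  # [4, 0, 0, 0]
print(to_digits(4000, 60))  # [1, 6, 40], since 1*3600 + 6*60 + 40 = 4000
```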

Around the 4th century BC, the ancient Greeks began to count using the abacus. Originally it was a flat clay tablet with stripes scratched on it with a sharp object. Counting was done by placing small stones or other small objects on these stripes in a certain order.

In China, in the 4th century AD, a seven-bead abacus appeared: the suanpan. Wires or cords, nine or more of them, were stretched across a rectangular wooden frame. Another wire (cord), stretched perpendicular to the others, divided the suanpan into two unequal parts. In the larger compartment, called "earth", five beads were strung on each wire; in the smaller one, "heaven", there were two. Each wire corresponded to a decimal place.
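
How a suanpan shows a number can be sketched in Python, assuming the usual convention that a heaven bead moved toward the dividing bar counts 5 and an earth bead counts 1 on its rod. Here each digit 0-9 uses at most one heaven bead and four earth beads; the spare beads on each rod are not modeled, and the function names are illustrative.

```python
def suanpan_rods(n: int) -> list[tuple[int, int]]:
    """For each decimal digit of the non-negative integer n (most significant first),
    return (heaven beads moved, earth beads moved) on that rod."""
    return [(int(d) // 5, int(d) % 5) for d in str(n)]

def rods_value(rods: list[tuple[int, int]]) -> int:
    """Read the number back: heaven beads count 5, earth beads count 1,
    and each rod is one decimal place."""
    value = 0
    for heaven, earth in rods:
        value = value * 10 + 5 * heaven + earth
    return value

print(suanpan_rods(1947))  # [(0, 1), (1, 4), (0, 4), (1, 2)]
```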

The traditional soroban abacus, which came to Japan from China, became popular there from the 16th century. At about the same time, the Russian counting frame (schoty) appeared in Russia.

In the 17th century, building on the logarithms discovered by the Scottish mathematician John Napier, the Englishman Edmund Gunter created a logarithmic scale (1620), and soon afterward William Oughtred combined two such scales into the slide rule. The device was continually improved and has survived to this day. It can multiply and divide numbers, raise them to powers, and find logarithms and trigonometric functions.
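The principle behind the slide rule is that log(a × b) = log a + log b: the scales are marked at distances proportional to the logarithm, so sliding one scale along the other adds two lengths, and the product is read off where the combined length ends. A minimal sketch of that idea; the rounding to three significant figures is an assumption standing in for the limited precision of reading a physical scale.

```python
import math


def slide_rule_multiply(a: float, b: float, sig_figs: int = 3) -> float:
    """Multiply two positive numbers the way a slide rule does.

    Each scale is marked so that the distance to x is proportional to log10(x).
    Sliding one scale along the other adds the two distances; the answer is
    the value marked at the end of the combined distance.
    """
    if a <= 0 or b <= 0:
        raise ValueError("logarithmic scales only cover positive numbers")
    distance = math.log10(a) + math.log10(b)   # add the two lengths
    product = 10 ** distance                   # read the value at that point
    # Assumption: a real rule can only be read to about three significant figures.
    exponent = math.floor(math.log10(product))
    return round(product, sig_figs - 1 - exponent)
```

For instance, `slide_rule_multiply(123, 456)` returns `56100.0` rather than the exact 56088, which mirrors how a slide-rule user had to track the decimal point and accept limited precision. Division works the same way with the lengths subtracted.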

The slide rule completed the manual (pre-mechanical) stage of computing technology.

The first mechanical calculators

In 1623, the German scientist Wilhelm Schickard built the first mechanical "calculator", which he called the calculating clock. Like an ordinary clock, its mechanism consisted of gears and sprockets. However, the invention became widely known only in the mid-20th century.

A qualitative leap in computing technology came in 1642 with the Pascaline adding machine. Its creator, the French mathematician Blaise Pascal, began working on it before he was 20. The Pascaline was a box containing many interconnected gears; the numbers to be added were entered by turning special wheels.

In 1673, the Saxon mathematician and philosopher Gottfried Wilhelm Leibniz invented a machine that performed the four basic arithmetic operations and could also extract square roots. The machine itself worked in the decimal system, using a stepped drum now known as the Leibniz wheel; Leibniz developed binary arithmetic separately and published it in 1703.

In 1820, the Frenchman Charles Xavier Thomas de Colmar, building on Leibniz's ideas, patented the arithmometer, an adding machine that could also multiply and divide. Two years later, the Englishman Charles Babbage began designing a machine, the Difference Engine, that would calculate to 20 decimal places. That project remained unfinished, but in the 1830s Babbage conceived another one, the Analytical Engine, intended for exact scientific and technical calculations. The machine was to be controlled by a program, with punched cards carrying different patterns of holes used to input and output information. Babbage's project anticipated electronic computing technology and the tasks it would be used to solve.

Notably, the title of the world's first programmer belongs to a woman, Ada Lovelace (née Byron). She wrote the first programs for Babbage's Analytical Engine, and the Ada programming language was later named after her.

The first precursors of the computer

In 1887, the history of computing technology entered a new stage. The American engineer Herman Hollerith designed the first electromechanical calculating machine, the tabulator. Its mechanism contained relays, counters, and a special sorting box. The device read and sorted statistical records punched onto cards. The company Hollerith founded, the Tabulating Machine Company, later became the core of the world-famous computer giant IBM.

In 1930, the American Vannevar Bush built the differential analyzer, a mechanical analog computer driven by electric motors that solved differential equations with wheel-and-disc integrators. It could solve complex mathematical problems far more quickly than manual methods.

In 1936, the English scientist Alan Turing described an abstract machine, now called the Turing machine, that became the theoretical basis of modern computers. It had the essential features of modern computing: it carried out operations step by step, following a program of instructions held in its memory.
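The step-by-step behavior of a Turing machine can be shown with a small simulator. In this sketch, which is an illustration rather than Turing's own formulation, the rule table plays the role of the program: for each (state, symbol) pair it says what to write, which way to move the head, and which state comes next. The function name, the state names, and the binary-increment example are all hypothetical choices made for this example.

```python
def run_turing_machine(rules, tape, state="start", head=0, blank="_", max_steps=10_000):
    """Run a one-tape Turing machine until it reaches the "halt" state.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (one cell left) or +1 (one cell right).
    Returns the non-blank part of the tape as a string.
    """
    cells = dict(enumerate(tape))   # sparse tape, unbounded in both directions
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical program: add 1 to a binary number (head starts on the leftmost digit).
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),   # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),   # past the last digit: turn back
    ("carry", "1"): ("0", -1, "carry"),   # 1 + 1 = 0, carry moves left
    ("carry", "0"): ("1", -1, "halt"),    # 0 + 1 = 1, done
    ("carry", "_"): ("1", -1, "halt"),    # carry runs past the leftmost digit
}
```

Running `run_turing_machine(INCREMENT, "1011")` returns `"1100"`. Changing only the rule table changes what the same machine computes, which is the sense in which the table is a program.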

In 1937, the American scientist George Stibitz built the first electromechanical device in the United States capable of binary addition. Its operation was based on Boolean algebra, the mathematical logic developed by George Boole in the mid-19th century, which uses the logical operators AND, OR, and NOT. The binary adder later became an integral part of the digital computer.
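How binary addition reduces to the Boolean operators AND, OR, and NOT can be sketched in software. Stibitz built his adder from relays; the Python below only mirrors the logic, not his actual circuit. A full adder combines two bits and an incoming carry, and chaining one full adder per bit gives a ripple-carry adder. All function names here are illustrative.

```python
def and_gate(a: int, b: int) -> int:
    return a & b


def or_gate(a: int, b: int) -> int:
    return a | b


def not_gate(a: int) -> int:
    return 1 - a


def xor_gate(a: int, b: int) -> int:
    # Exclusive OR built only from AND, OR and NOT: (a OR b) AND NOT (a AND b)
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits and return (sum_bit, carry_out)."""
    partial = xor_gate(a, b)
    sum_bit = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return sum_bit, carry_out


def add_binary(x: str, y: str) -> str:
    """Ripple-carry addition of two binary strings, one full adder per bit."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)
    carry, bits = 0, []
    for a, b in zip(reversed(x), reversed(y)):   # least significant bit first
        s, carry = full_adder(int(a), int(b), carry)
        bits.append(str(s))
    if carry:
        bits.append("1")
    return "".join(reversed(bits))
```

For example, `add_binary("1011", "110")` returns `"10001"`, that is, 11 + 6 = 17.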

In 1938, Claude Shannon, then a graduate student at the Massachusetts Institute of Technology, outlined the principles of a computer's logical structure, showing how electrical relay and switching circuits can solve problems of Boolean algebra.

Beginning of the computer era

The governments of the countries fighting in the Second World War recognized the strategic role of computers in warfare. This spurred the development of the first generation of computers, which emerged in parallel in those countries.

The German engineer Konrad Zuse was a pioneer of computer engineering. In 1941 he built the first automatic program-controlled computer, the Z3. It was built around telephone relays, its programs were encoded on punched tape, and it worked in the binary system and could handle floating-point numbers.

Zuse's later machine, the Z4, became one of the first computers in commercial use. Zuse also went down in history as the creator of the first high-level programming language, Plankalkül.

In 1942, the American researchers John Atanasoff and Clifford Berry built a computing device based on vacuum tubes, the Atanasoff-Berry Computer. It also used binary code and could perform a number of logical operations.

In 1943, in strict secrecy, Colossus, the first programmable electronic digital computer, was built at a British government laboratory. Instead of electromechanical relays, it used about 2,000 vacuum tubes to store and process information. It was designed to help break the Lorenz cipher, which the German High Command used for its most secret teleprinter messages. The machine's existence remained a closely guarded secret for decades. After the war, Winston Churchill reportedly ordered it destroyed.

Architecture development

In 1945, John (János Lajos) von Neumann, a Hungarian-born American mathematician, described the architecture on which modern computers are modeled. He proposed storing the program as code directly in the machine's memory, so that programs and data are kept together in the same memory.
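The stored-program idea can be illustrated with a toy machine whose instructions and data live in one list of integers. The instruction set (HALT, LOAD, ADD, STORE), the encoding opcode * 100 + address, and the single accumulator are hypothetical simplifications chosen for this sketch, not the design of any historical machine.

```python
HALT, LOAD, ADD, STORE = 0, 1, 2, 3   # hypothetical opcodes for this sketch


def run(memory: list[int]) -> list[int]:
    """Fetch-decode-execute loop over one shared memory.

    Each instruction is an integer opcode * 100 + address, stored in the
    same list as the data it operates on.
    """
    pc, acc = 0, 0                                   # program counter, accumulator
    while True:
        instruction = memory[pc]                     # fetch
        opcode, address = divmod(instruction, 100)   # decode
        pc += 1
        if opcode == HALT:                           # execute
            return memory
        elif opcode == LOAD:
            acc = memory[address]
        elif opcode == ADD:
            acc += memory[address]
        elif opcode == STORE:
            memory[address] = acc
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")


# Cells 0-3 hold the program, cells 5-7 hold the data.
program = [105, 206, 307, 0,   # LOAD 5, ADD 6, STORE 7, HALT
           0, 40, 2, 0]        # unused, 40, 2, result
```

After `run(program)`, cell 7 holds 42. Because instructions are just numbers in the same memory as the data, a program could even overwrite its own instructions, which is possible only when programs and data share one memory.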

ENIAC, the first general-purpose electronic computer, was being completed in the United States at the same time. It was originally programmed by setting switches and plugging cables, and von Neumann's stored-program design was developed for its successor, EDVAC. This giant weighed about 30 tons, occupied about 170 square meters, and used about 18,000 vacuum tubes. It could perform 300 multiplications or 5,000 additions per second.

The first universal programmable computer in continental Europe was created in 1950 in the Soviet Union (in Ukraine). A group of Kyiv scientists led by Sergei Alekseevich Lebedev designed the MESM (Small Electronic Calculating Machine). It performed 50 operations per second and contained about 6,000 vacuum tubes.

In 1952, Soviet computing gained the BESM (Large Electronic Calculating Machine), also developed under Lebedev's leadership. Performing up to 10,000 operations per second, it was at the time the fastest computer in Europe. Data were entered into its memory from punched tape, and results were output by photographic printing.

In the same period, the USSR produced a series of large computers called "Strela" ("Arrow"), designed by Yuri Yakovlevich Bazilevsky. From 1954, serial production of the general-purpose "Ural" computers, developed under the direction of Bashir Rameev, began in Penza. Later Ural models were hardware- and software-compatible with one another, and a wide selection of peripherals made it possible to assemble machines in various configurations.

Transistors and the first mass-produced computers

Vacuum tubes, however, failed frequently, which made the machines very difficult to work with. The transistor, invented in 1947, solved this problem. Using the electrical properties of semiconductors, it did the same job as a vacuum tube while taking up far less space and consuming much less power. Together with magnetic-core (ferrite) memory, transistors made it possible to shrink computers considerably and to make them more reliable and faster.

In 1954, the American company Texas Instruments began mass-producing silicon transistors, and two years later one of the first transistor-based second-generation computers, the TX-0, appeared in Massachusetts, at MIT's Lincoln Laboratory.

By the middle of the 20th century, many government organizations and large companies were using computers for scientific, financial, and engineering calculations and for processing large volumes of data. Computers gradually acquired features familiar to us today: plotters, printers, and magnetic disk and tape storage appeared during this period.

The growing use of computers broadened their areas of application and called for new software technologies. High-level programming languages (Fortran, COBOL, and others) appeared, making code easier to write and programs easier to move from one machine to another. Special translator programs (compilers) were created to convert code in these languages into instructions the machine can execute directly.
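What a translator does can be sketched on a very small scale: turn a human-readable arithmetic expression into a list of simple machine-style instructions, then run them. This sketch uses Python's standard `ast` module to parse the expression and targets a hypothetical stack machine (PUSH, LOAD, ADD, SUB, MUL, DIV); real Fortran or COBOL compilers are vastly more complex, so treat it only as an illustration of the idea.

```python
import ast
import operator

# Hypothetical instruction names for a simple stack machine.
BINARY_OPS = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL", ast.Div: "DIV"}
EXECUTE_OPS = {"ADD": operator.add, "SUB": operator.sub,
               "MUL": operator.mul, "DIV": operator.truediv}


def compile_expr(source: str) -> list[tuple]:
    """Translate an arithmetic expression into stack-machine instructions."""
    def emit(node: ast.AST) -> list[tuple]:
        if isinstance(node, ast.BinOp) and type(node.op) in BINARY_OPS:
            # Operands first, then the operator (postfix order).
            return emit(node.left) + emit(node.right) + [(BINARY_OPS[type(node.op)],)]
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return [("PUSH", node.value)]
        if isinstance(node, ast.Name):
            return [("LOAD", node.id)]
        raise ValueError(f"unsupported construct: {ast.dump(node)}")
    return emit(ast.parse(source, mode="eval").body)


def execute(code: list[tuple], variables: dict) -> float:
    """Run the instructions on a stack and return the final value."""
    stack = []
    for instruction in code:
        op = instruction[0]
        if op == "PUSH":
            stack.append(instruction[1])
        elif op == "LOAD":
            stack.append(variables[instruction[1]])
        else:
            right = stack.pop()
            left = stack.pop()
            stack.append(EXECUTE_OPS[op](left, right))
    return stack.pop()
```

For example, `compile_expr("a * (b + 2)")` yields `[("LOAD", "a"), ("LOAD", "b"), ("PUSH", 2), ("ADD",), ("MUL",)]`, and executing that list with `a = 3` and `b = 4` gives 18. The programmer writes the formula once, and only the translator needs to know the target machine's instructions.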

The advent of integrated circuits

Between 1958 and 1960, thanks to the American engineers Jack Kilby and Robert Noyce, who worked independently, the world learned of the integrated circuit. Miniature transistors and other components, eventually hundreds or thousands of them, were built on a single silicon or germanium chip. These microcircuits, only about a centimeter across, were much faster than circuits built from discrete transistors and consumed far less power. Their arrival marks the third generation of computers.

In 1964, IBM announced the first computers of the System/360 family, built on microcircuits (IBM's hybrid Solid Logic Technology modules). Mass production of computers can be dated from this point; in total, more than 20,000 of these machines were produced.

In 1972, the USSR developed the ES (Unified System) computers, standardized systems for computing centers that shared a common instruction set and were modeled on the American IBM System/360.

In 1965, DEC released the PDP-8, the first commercially successful minicomputer. The relatively low cost of minicomputers put them within reach of small organizations as well.

Software improved steadily during the same period. Operating systems were developed to support as many peripheral devices as possible, and new programs appeared. In 1964, BASIC was created, a language designed specifically for teaching novice programmers. Around 1970 came Pascal, which proved very convenient for solving many applied problems.

Personal computers

The fourth generation of computers began after 1970. It is characterized by the use of large-scale integrated circuits in computer production. Such machines could perform billions of operations per second, and their RAM capacity grew to 500 million bits. A sharp drop in the cost of microcomputers gradually made them affordable to ordinary people.

Apple was one of the first manufacturers of personal computers. Its founders, Steve Jobs and Steve Wozniak, built their first computer, the Apple I, in 1976; it sold for $666.66. A year later the company introduced its next model, the Apple II.

The computer of this era became, for the first time, something like a household appliance: compact, elegantly designed, and easy to use. The spread of personal computers in the late 1970s markedly reduced demand for mainframes. This seriously worried their leading manufacturer, IBM, and in 1981 the company brought its own personal computer to market.

The IBM PC had an open architecture and was based on Intel's 16-bit 8088 microprocessor. A typical configuration included a monochrome display, two 5.25-inch floppy disk drives, and 64 kilobytes of RAM. At IBM's request, Microsoft supplied the machine's operating system. Numerous IBM PC clones appeared on the market, spurring the growth of industrial production of personal computers.

In 1984, Apple released a new computer, the Macintosh. Its operating system was exceptionally user-friendly: it presented commands as graphical images and let users select them with a mouse. This made the computer even more accessible, since it required no special skills.

Some sources date the fifth generation of computers to 1992-2013. In brief, these computers are built on highly complex microprocessors with a parallel-vector structure, which lets them execute dozens of program instructions simultaneously. Machines with several hundred processors working in parallel process data even faster and more accurately and make it possible to build efficient networks.

The development of modern computing technology already allows us to speak of a sixth generation of computers: electronic and optoelectronic machines running on tens of thousands of microprocessors, characterized by massive parallelism and modeled on the architecture of biological neural systems, which lets them recognize complex images successfully.